How Music Technology Advances

The Connection between Sound and Silicon Goes Back Centuries

While electronic music production tools may seem a modern phenomenon, the relationship between technology and music actually has ancient roots.

Early musical instruments incorporated materials like wood, bronze, and animal gut, pushing the boundaries of acoustics through innovative mechanical design.

By the 18th century, tech-savvy inventors began experimenting with self-playing machines and automated instruments. The most famous descendant of these was the player piano of the late 19th century, able to reproduce a performance from punched paper rolls.

These mechanical marvels foreshadowed the digital age, demonstrating technology’s potential to encode, store, and replay sound.

As the Industrial Revolution advanced through the 19th century, new materials like sheet metal contributed to breakthrough acoustic designs.

Instruments evolved to take advantage of factory production. A one-piece cast-iron piano frame, patented in 1825, allowed higher string tension and a louder, fuller tone, and became a foundation of the modern piano.

The Analog Synthesis Revolution Kicks Off the Digital Era:

While technology and music have always been closely intertwined, the true revolution began between the 1950s and 1970s as electronic synth pioneers explored the frontiers of analog circuitry.

Eager synth designers opened up entirely new sonic territories beyond acoustic instruments. Innovators like Robert Moog and Don Buchla crafted modular systems that allowed a vast range of sound design possibilities.

Key building blocks of this analog period included voltage-controlled oscillators generating raw waveforms, filters for sculpting timbres, and envelope generators controlling amplitude over time. Artists paired these advanced circuits with radical visual aesthetics to create electronic music as a wholly new artistic medium. Think classic-era Kraftwerk, Silver Apples or Tangerine Dream.
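To make the oscillator and envelope idea concrete, here is a minimal digital sketch of that signal chain. It is only an illustration, not how any particular analog synth works: the sample rate, the function names and the stage lengths are all assumptions chosen for this example, a sine wave stands in for the oscillator, and the filter stage is left out to keep things short.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed; kept low so the example stays small)


def sine_oscillator(freq_hz, num_samples, sample_rate=SAMPLE_RATE):
    """Return num_samples of a sine wave at freq_hz, in the range [-1, 1]."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate)
            for n in range(num_samples)]


def adsr_envelope(num_samples, attack, decay, sustain_level, release):
    """Piecewise-linear ADSR gain curve; attack, decay and release are in samples."""
    sustain = num_samples - attack - decay - release
    if sustain < 0:
        raise ValueError("note too short for the given stage lengths")
    env = [n / attack for n in range(attack)]                           # rise 0 -> 1
    env += [1 - (1 - sustain_level) * n / decay for n in range(decay)]  # fall 1 -> sustain
    env += [sustain_level] * sustain                                    # hold
    env += [sustain_level * (1 - n / release) for n in range(release)]  # fade toward 0
    return env


def synth_note(freq_hz, duration_s):
    """Shape an oscillator's output with an envelope (hypothetical stage lengths)."""
    n = int(duration_s * SAMPLE_RATE)
    tone = sine_oscillator(freq_hz, n)
    env = adsr_envelope(n, attack=n // 10, decay=n // 10,
                        sustain_level=0.6, release=n // 5)
    return [sample * gain for sample, gain in zip(tone, env)]


note = synth_note(440.0, 0.5)  # half a second of A4
```

The envelope is just a list of gain values multiplied against the oscillator output sample by sample, which is roughly the job an envelope generator and amplifier do together in a modular system.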

In tandem, four-track and eight-track reel-to-reel recorders enabled multi-track layering well before the digital age.

The Beatles epitomized this approach, building much of their experimental work on four-track machines at Abbey Road before moving to eight-track recording in the late 1960s.

Earlier, in France, musique concrète pioneers such as Pierre Schaeffer had manipulated found sounds on tape to build mesmerizing soundscapes.

By the 1970s, analog miniaturization allowed synths and drum machines to break out of the studio. Portable instruments, along with early polyphonic keyboards, took electronic music to the stage.

Underground artists soon used these tools to sculpt dub, krautrock, synthpop and more.

Enter Digital and the DAW Revolution:

The 1980s digital era saw music creation upended as computing entered homes. Key breakthroughs included the MIDI specification, announced in 1982 and published in 1983, which let hardware instruments from different makers communicate and synchronize digitally. E-mu's Emulator samplers, including 1984's Emulator II, shook up production by digitally sampling real instruments.
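For a sense of how simple MIDI is on the wire, here is a small sketch that builds the three-byte Note On and Note Off channel messages. The function names are made up for this example; the byte layout (a status byte of 0x90 or 0x80 combined with a channel number from 0 to 15, followed by a note number and a velocity, each from 0 to 127) follows the MIDI 1.0 channel voice message format.

```python
def _check(value, upper, name):
    """Reject values outside 0..upper so invalid bytes never reach a device."""
    if not 0 <= value <= upper:
        raise ValueError(f"{name} must be between 0 and {upper}, got {value}")


def note_on(channel, note, velocity):
    """Build a 3-byte Note On message (hypothetical helper name)."""
    _check(channel, 15, "channel")
    _check(note, 127, "note")
    _check(velocity, 127, "velocity")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel, note, velocity=0):
    """Build a 3-byte Note Off message (hypothetical helper name)."""
    _check(channel, 15, "channel")
    _check(note, 127, "note")
    _check(velocity, 127, "velocity")
    return bytes([0x80 | channel, note, velocity])


# Middle C (note 60) on the first channel, played fairly hard, then released.
press = note_on(0, 60, 100)
release = note_off(0, 60)
```

Actually sending these bytes to an instrument needs a MIDI interface or library, which is outside the scope of this sketch.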

Equally pivotal was the rise of digital audio workstations (DAWs) like Sound Designer, Pro Tools, Cubase and Logic. DAWs shifted recording into nonlinear, editor-based workflows as tape machines fell out of everyday use.

Programmers crafted virtual instruments replicating synths and more to be controlled within DAW interfaces.

As computer performance and storage capacity grew exponentially through the 1990s, DAWs dominated. Users could treat audio clips as discrete, editable objects placed into arrangement timelines.

Plugins offering effects like convolution reverb made it possible to simulate studio ambience anywhere. Music became software-driven, whether producing hip hop in FruityLoops or scoring films in Pro Tools.
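The idea behind convolution reverb is that a room can be described by its impulse response, the sound it returns when excited by a single click, and that any dry signal can be placed in that room by convolving the two. The sketch below shows the bare operation in plain Python. The function name and the tiny made-up impulse response are assumptions for illustration; real plugins typically use FFT-based methods to handle impulse responses that are many thousands of samples long.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution of a dry signal with an impulse response."""
    if not dry or not impulse_response:
        return []
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, x in enumerate(dry):
        for j, h in enumerate(impulse_response):
            # Each input sample triggers a scaled copy of the room's response.
            out[i + j] += x * h
    return out


# A made-up "room": the direct sound followed by two quieter echoes.
room = [1.0, 0.5, 0.25]

# Two clicks in a row; the wet signal is the sum of two overlapping echoes.
wet = convolve([1.0, 1.0], room)
```

The output is longer than the input because the reverb tail keeps ringing after the dry signal has stopped.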

Home Studios and Digital Distribution Democratize Music Creation:

With affordable computers and multimedia authoring software emerging, anyone could assemble compositions from vast virtual instrument libraries.

This gave rise to waves of bedroom producers and “lo-fi” works crafted entirely within a laptop. Simultaneously, the music consumption paradigm shifted as MP3 players, followed by streaming services, took off.

Music access moved to the pocket as smartphone ownership rose. Services like Spotify, Apple Music and YouTube opened new revenue channels and audiences for independent artists.

Now fans could discover niche genres and follow favorite labels or DJs directly through tailored recommendation algorithms.

Today’s online distribution channels empower millions of creators worldwide to share works. Platforms like Bandcamp provide direct digital sales while promoting live shows and merchandise to diversify revenue streams.

These marketplaces also facilitate globalized online fan communities forming around shared music interests.

Modern Applications of Music Technology Across Genres:

Technology has blurred genre lines as producers seamlessly fuse electronic and acoustic textures. Urban, trap and future beats often integrate live instrumentation amid software sound design.

Orchestral scores deploy libraries of realistic virtual instruments. DJ sets dynamically remix on the fly in Ableton Live or via hardware samplers.

New gear continually pushes technological boundaries. For example, Roland’s JU-06A synthesizer module captures the sound of the classic Juno synths with expanded connectivity. Korg’s Volca series pairs portability with detailed parameter controls, and several models in the range are fully analog. Top software stays ahead through frequent upgrades, from improved workflows to new virtual instruments.

Digital fabrication technologies also fuel momentum. 3D printing allows modifying commercial instruments or designing unique live controller interfaces.

Open-source platforms like Arduino enable DIY electronic projects. Entire synthesizers can be assembled from circuit boards and code. Concurrently, algorithms generate “AI music”, raising questions about machine creativity.

Education and Careers Evolve with the Industry:

Continually advancing music technology drives demand for specialists. In response, many colleges have established technology-focused degrees to prepare the next generation of audio professionals.

Associate and bachelor’s programs cover synthesis, audio engineering, sound design, music business applications and related subjects.

Graduates work in recording studios, post-production houses, game studios and broadcast facilities. Roles include engineer, programmer, producer, sound designer and composer. Live production relies on innovative systems to execute elaborate shows reliably. Sound designers shape entire virtual worlds in films, AAA games and VR.

DJ software transformed the art form, allowing intricate live sets beyond what vinyl alone could do. Mobile applications bring production-quality tools anywhere.

Live-streaming concert platforms thrived during the pandemic as fans engaged virtually. Clearly, technology widens artistic possibilities while expanding accessible distribution models.

Future Directions – AI, Immersive Media, and Beyond:

As technology and creativity advance in lockstep, entirely new industries emerge at the intersection of music and tech. For example, AI and machine learning enable automatic generation, mixing and mastering of tracks in specific styles, with results that increasingly approach human work. Promising algorithmic composition tools assist rather than replace human musicians.

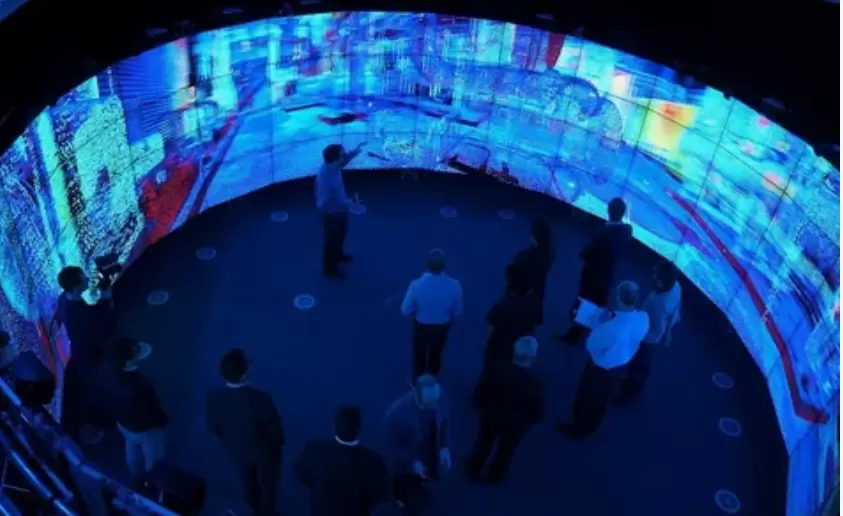

Augmented and virtual reality will deepen audiences’ connection to performances. VR systems can even transport listeners into fully interactive sonic environments.

Meanwhile, spatial audio formats let listeners actively control 3D sound fields across any environment. Bionic implants may one day transmit audio directly into the brain.

Wireless audio networks lay the foundation for shared augmented music experiences. Standards like Bluetooth and WiSA carry audio without cables, with WiSA aimed at multi-channel setups. As 5G networks expand, these systems gain bandwidth for streaming immersive formats at high resolutions.

Ever-evolving tech and musicians’ boundary-pushing spirit ensure that the link between music and technology endures as a driver of artistic progress and industry growth.

The partnership established in nascent lo-fi studios now helps bring about entirely new artistic paradigms and business opportunities.

The possibilities seem limitless as sound and silicon advance together into the future.

FAQ

Q. What technology is used in music?

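A. Everything from acoustic and electronic instruments to synthesizers, samplers, MIDI, digital audio workstations (DAWs), plugins and streaming platforms.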

Q. What is a music technology course?

A. A course that teaches the basic physics of music and how to make and shape electronic sounds using tools such as virtual synthesizers.

Q. Who created music technology?

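A. No single person. Thomas Edison is often credited as a starting point for recorded sound thanks to his phonograph of 1877, but music technology has developed over centuries through many inventors.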

Q. Is Spotify a music technology?

A. Yes. Spotify is a leading music streaming platform, which makes it part of the distribution side of music technology.

Q. What are the three types of music technology?

A. (1) transducers, (2) electronic instruments, and (3) computer-based devices.

Conclusion

It’s clear that music technology has come an incredibly long way, from its early roots in a live oral tradition to the pioneering recording devices that first captured sound.

Each new innovation, from tape machines to synthesizers to digital audio workstations, has empowered creators and transformed the listening experience.

While streaming platforms and social media now dominate how audiences enjoy music, new frontiers like AI composition, virtual and augmented reality, and computational analysis promise to further expand the art form.

One certainty is that music brings joy and meaning to our lives, forming powerful bonds between all people. Wherever technologies may take the musical experience in the future, that core human truth will surely remain.