Understanding the Revolutionary Impact of Processing Technology

Data Transformation at the Core of Modern Computing

At its foundation, processing technology involves the manipulation and analysis of digital data through computational algorithms.

Whether it’s a multi-billion-transistor central processing unit (CPU) or a tiny microcontroller, the core function of every processor remains the same: transforming raw input data into usable, structured output.

The process of data transformation underlies almost every aspect of our digital lives.

From supercomputers analyzing particle accelerator experiments for insights into quantum mechanics, to mobile phones interpreting touchscreen gestures, to satellites processing terabytes of Earth observation imagery daily, the rapid pace of innovation across industries has been fueled by ever-advancing processing capabilities.

The astonishing growth of computing power following Moore’s Law has enabled technology to integrate further into our daily activities through intelligent systems, connected devices, and optimized services.

Data Lakes Fuel Breakthrough Discoveries

One area where processing technology has unlocked tremendous potential is “data lakes”, the vast repositories where organizations aggregate and curate raw digital records. For example, CERN’s Large Hadron Collider generates over 25 petabytes of collision data per year in the search for clues about the universe’s origins.

NASA also collects petabytes annually from spacecraft, telescopes and Earth science instruments.

Deep processing algorithms are able to uncover patterns within these gigantic datasets that no human team could analyze alone.

By filtering particle event streams for anomalies or visualizing satellite imagery time lapses to spot environmental changes, computational analyses have accelerated scientific inquiry. Additionally, medical researchers are applying machine learning to process electronic health records of millions of patients.

This aids the detection of treatment patterns, genetic risk factors, and personalized diagnostics, bringing new therapies to reality sooner.
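The anomaly filtering mentioned above can be illustrated with a very small sketch. It assumes a simple z-score rule over a list of numeric readings; the function name `flag_anomalies`, the threshold, and the sample data are all hypothetical and are not drawn from any real particle physics or Earth observation pipeline, which would use far more sophisticated methods.

```python
from statistics import mean, stdev

def flag_anomalies(values, threshold=2.5):
    """Return (index, value) pairs whose z-score exceeds the threshold.

    Hypothetical helper: a plain z-score rule, chosen only for illustration.
    """
    if len(values) < 2:
        return []  # stdev needs at least two data points
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all readings identical, nothing stands out
    return [(i, v) for i, v in enumerate(values)
            if abs(v - mu) / sigma > threshold]

# Made-up sensor readings with one obvious outlier at index 6.
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 25.0, 10.1, 9.7, 10.0]
anomalies = flag_anomalies(readings, threshold=2.5)
print(anomalies)  # [(6, 25.0)]
```

Note that with small samples a single outlier inflates the standard deviation, so a threshold of 3.0 would never fire on ten points; this is one reason production systems tend to prefer more robust statistics.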

As data lake sizes multiply at an exponential rate, processing power must keep pace to extract continuing value.

Future supercomputers aim for exascale speeds, on the order of 10^18 operations per second, to help physicists simulate environments like the early universe, black holes, climate systems and more. The insights gained through processing enormous, multidimensional datasets promise to push human understanding of science and nature to new frontiers.

Behind-the-Scenes Optimization Across Industries

While data lakes focus processing capabilities on major scientific and federal initiatives, behind-the-scenes optimization across industries also relies heavily on computational transformations.

Consider how supply chain logistics planning evaluates petabytes of shipping records, inventory levels and demand forecasts using predictive analytics.

This helps global retailers like Amazon strategically position warehouses, anticipate product gaps before stockouts occur, and route trucks optimally to lower transport costs.
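As a rough illustration of anticipating a product gap before it happens, the sketch below compares "days of cover" (stock on hand divided by forecast daily demand) with the supplier lead time. The function names, the SKU names, and all figures are hypothetical; real retail planning systems rely on much richer forecasting models.

```python
def days_of_cover(on_hand, forecast_daily_demand):
    """Estimate how many days current stock will last at the forecast rate."""
    if forecast_daily_demand <= 0:
        return float("inf")  # no expected demand, stock never runs out
    return on_hand / forecast_daily_demand

def needs_reorder(on_hand, forecast_daily_demand, lead_time_days):
    """Flag an item if stock would run out before a replenishment could arrive."""
    return days_of_cover(on_hand, forecast_daily_demand) < lead_time_days

# Made-up inventory: sku -> (units on hand, forecast units/day, lead time in days)
inventory = {
    "widget": (120, 40.0, 5),
    "gadget": (500, 20.0, 7),
}

at_risk = [sku for sku, (on_hand, demand, lead) in inventory.items()
           if needs_reorder(on_hand, demand, lead)]
print(at_risk)  # ['widget']
```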

Likewise, online video platforms leverage terabyte-scale encoding farms to compress 4K footage for bandwidth-efficient streaming.

Finance companies apply algorithms to process investor transactions, market trends and economic reports to recommend low-risk portfolios.

Manufacturers, meanwhile, use industrial internet of things (IIoT) sensors that generate trillions of operational data points daily, which are analyzed for predictive maintenance insights to avoid costly downtime.

In each of these scenarios, processing technologies refine digital exhaust into intelligence, helping businesses run more profitably through enhanced decision-making, service quality and productivity.

Data-driven operational excellence has become crucial for competitive advantage across all verticals, something that was unimaginable before today’s computational capabilities.

Intelligent Assistants and Conversational Systems

For consumers, some of the most visible processing applications are intelligent personal assistants like Apple’s Siri, Amazon’s Alexa and Microsoft’s Cortana. Behind seemingly simple interactions, such as answering questions or controlling smart devices, lies tremendous processing power.

Systems must analyze conversational context, search vast databases, and seamlessly coordinate activity across the home network, all within milliseconds, to feel responsive.

More advanced techniques like natural language understanding (NLU) have emerged that decode not just keywords but nuanced intent, sentiment and context from unstructured human speech.

By processing petabytes of training examples paired with annotated meaning, NLU models constantly learn to better comprehend subtle language variations.

This evolution will eventually bring AI helpers to an unprecedented level of seamless assistance for routine tasks.

Meanwhile in call centers, processing technologies have started automating repetitive customer queries through conversational bots.

By analyzing transcripts of millions of past support chats, systems deduce typical questions and issues to develop conversational workflows.

This redirects basic inquiries away from agents, freeing personnel for more complex cases requiring creativity or empathy.
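A toy version of deducing typical questions from past chats might look like the sketch below. It assumes the transcripts have already been reduced to one customer question per string and simply counts normalized duplicates; the helper names and the sample chats are hypothetical, and real systems would cluster paraphrases rather than rely on exact matches.

```python
import re
from collections import Counter

def normalize(question):
    """Lowercase and strip punctuation so near-identical questions match."""
    return re.sub(r"[^a-z0-9 ]+", "", question.lower()).strip()

def top_questions(transcripts, n=3):
    """Return the n most frequent normalized questions with their counts."""
    counts = Counter(normalize(q) for q in transcripts)
    return counts.most_common(n)

# Made-up support chat openers.
past_chats = [
    "How do I reset my password?",
    "how do i reset my password",
    "Where is my order?",
    "How do I reset my password!",
    "Where is my order",
]

common = top_questions(past_chats, n=2)
print(common)
# [('how do i reset my password', 3), ('where is my order', 2)]
```

The most frequent entries are natural candidates for scripted bot workflows, while rare or unmatched questions can still be routed to a human agent.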

As language models increase in scale and prowess, intelligent virtual representatives may one day converse indistinguishably from people.

The Unlimited Possibilities Ahead

Given the roughly two-year doubling of transistor counts described by Moore’s Law, the future possibilities for data-driven technologies seem limitless.
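To make the doubling arithmetic concrete, the sketch below computes the growth factor implied by a fixed doubling period. The two-year period is an assumption taken from the common statement of Moore’s Law, and `growth_factor` is a hypothetical helper; actual hardware trends have not followed the curve exactly.

```python
def growth_factor(years, doubling_period_years=2.0):
    """Multiplicative growth after `years` if capacity doubles every period."""
    return 2 ** (years / doubling_period_years)

print(growth_factor(10))  # 32.0   (five doublings)
print(growth_factor(20))  # 1024.0 (ten doublings)
```

Under this assumption, a decade yields roughly a 32-fold increase and two decades roughly a thousand-fold increase, which is why sustained doubling feels so dramatic.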

Some potential applications on the near horizon include genome sequencing at pharmacies to tailor vitamin supplements according to individual microbes,

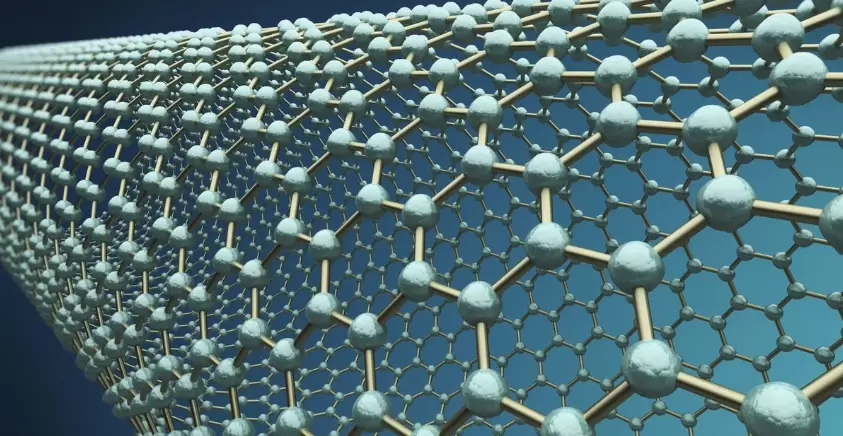

Intelligent tutoring systems personalizing lifelong education paths based on strengths/weaknesses, and quantum processors massively accelerating computational chemistry for next gen materials design.

Longer term, experts speculate technologies like photonic, DNA or carbon nanotube chips could potentially scale processor speed and density to unforeseen levels.

Imagine cloud data centers running on femtowatt circuits etched atomically across planetary surfaces, solving any conceivable simulation in moments.

With continued innovation transforming petabytes into petathoughts, processing will surely give rise to tomorrow’s world in ways we have yet to imagine.

The digital revolution shows no signs of slowing down as freely flowing data fuels revolutionary discovery.

FAQ

Q. What are examples of process technology?

A. Petroleum refining, mining, milling, power generation, waste and wastewater management.

Q. What are the 5 processes of technology?

A. Collecting, organizing, analyzing, storing and retrieving.

Q. What is an example of a process in everyday life?

A. Getting ready for the day, going to work, coming home, exercising.

Q. What are products of technology?

A. Smartphones, personal computers, virtual reality headsets.

Q. What is an example of a system process?

A. Obtain credit rating for a customer from the credit system.

Conclusion

Processing technology delivers tremendous benefits by transforming raw data into actionable insights.

Its pervasive impacts have revolutionized industries, science and daily life by yielding optimizations, personalized services and new frontiers of discovery.

While artificial intelligence and data mining have mainly augmented human researchers so far, their future is limitless as algorithms grow ever smarter.

With processing power doubling consistently, the pace of digital innovation will surely accelerate, catapulting society toward intriguing possibilities that remain unseen over the technological horizon.

Our world will continue transforming dramatically as data flows fuel scientific revolutions, business transformations and quality-of-life advancements powered by ever-evolving computation.